Globally, data centres consume at least 200 terawatt-hours (TWh) of electricity per year (about 2% of global energy use). Reducing electricity usage in the data centre lessens environmental impact and lowers operational expenditure (OPEX).

Thanks to increased server efficiency, data centre power consumption has grown much more slowly than the demand for digital services. Even so, data centre operators and designers need to implement practices that reduce energy demand to help governments and businesses reach their environmental goals.

Selecting a server that is designed to meet the workload requirements efficiently can affect the overall power consumption of a data centre. Servers optimised for specific workloads avoid excess processing power that is not needed. In addition, some servers are more energy efficient than others by design, for example because they share some components. The latest generations of systems also perform significantly more work per watt, which reduces power consumption.

Matching your workload requirements with the right sized system

There are many component choices and configuration options when setting up a server. Traditional general-purpose servers are designed to work for any typical workload, which leads to over-provisioning resources to ensure the system works for the broadest range of applications. A workload-optimised system narrows the component choices and configuration options to match the requirements of a target set of workloads. These optimisations remove unnecessary functionality, which reduces not only cost but also power consumption and heat generation. The savings are significant when you consider that a deployment may scale to hundreds, thousands, or even tens of thousands of systems.
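
The effect of scale can be illustrated with a little arithmetic. The following is a minimal sketch, not a measurement: the 50 W saving per server is a hypothetical figure chosen for illustration, and the calculation assumes every server runs continuously at that constant saving.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_savings_kwh(watts_saved_per_server: float, server_count: int) -> float:
    """Energy saved per year, in kWh, if each server draws this much less power."""
    return watts_saved_per_server * server_count * HOURS_PER_YEAR / 1000


# Hypothetical saving of 50 W per server, at the fleet sizes mentioned above.
for count in (100, 1_000, 10_000):
    print(f"{count:>6} servers: {annual_savings_kwh(50, count):,.0f} kWh per year")
```

Because the saving grows linearly with the number of servers, a small per-system reduction becomes substantial across a large fleet.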

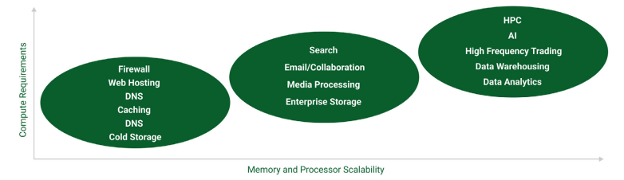

Different server types are designed for different workloads and vary in the number of CPUs, cooling capacity, memory capacity, I/O capacity, or networking performance they provide. For instance, HPC applications require fast CPUs, while content delivery networks need massive I/O capabilities. Using the server type that is designed for the workload reduces excess capacity, resulting in a cost reduction.

Demonstration of how different workloads require varying amounts of capability and scalability

Improving multi-node and blade efficiency

Server systems can be designed to share resources, which can lead to better overall efficiency. For example, multi-node systems can share power supplies and fans among several nodes, which removes the need to duplicate these components for each node. As a result, larger fans and more efficient power supplies can be used, cutting electricity consumption when all nodes are running applications.

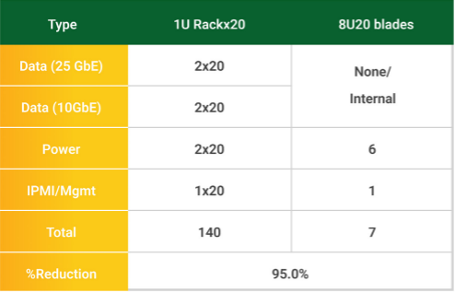

Another example of saving resources is to use a system where multiple independent servers share networking in addition to the power supplies and fans. With higher-density computing and integrated switches with shared power supplies, the required rack space can be reduced, along with a significant number of cables.

Another way to reduce energy usage when air cooling a server is to identify and minimise cabling issues. Power and network cables that block airflow require the fans to operate at a higher RPM, using more electricity. Careful placement of these cables, both inside and outside the chassis, reduces this risk. Additionally, a server that consists of blades with integrated switching will typically have fewer cables connecting systems, as these connections are made through a backplane.

Compared with 20 1U rackmount servers, a modern blade system with 20 blades in an 8U chassis needs 95% fewer cables, which can improve airflow and reduce the electricity used by the fans.
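
The article does not state the cable counts behind the 95% figure. The sketch below shows one set of numbers that would produce it. The per-server and per-chassis cable counts are assumptions made purely for illustration, and real configurations will differ.

```python
def cable_reduction_percent(cables_before: int, cables_after: int) -> float:
    """Percentage reduction in cable count between two configurations."""
    if cables_before <= 0:
        raise ValueError("cables_before must be positive")
    return (cables_before - cables_after) / cables_before * 100


RACKMOUNT_SERVERS = 20
# Assumed for illustration: 2 power + 2 network cables per 1U server.
CABLES_PER_RACKMOUNT_SERVER = 2 + 2
# Assumed for illustration: 4 external cables for the whole blade chassis,
# since blade-to-blade connections run through the backplane.
BLADE_CHASSIS_CABLES = 4

rackmount_cables = RACKMOUNT_SERVERS * CABLES_PER_RACKMOUNT_SERVER
reduction = cable_reduction_percent(rackmount_cables, BLADE_CHASSIS_CABLES)
print(f"{rackmount_cables} cables -> {BLADE_CHASSIS_CABLES} cables "
      f"({reduction:.0f}% fewer)")
```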

Selecting and optimising key server system components

Several decisions can be made when purchasing new server hardware. With the advancements in CPU and GPU design, the choice is not just which hardware to acquire but also which specific model matches the workload and its service level agreements (SLAs). Matching the model to the workload also reduces power consumption when running applications.

Performance/watt

As CPU and GPU technology improves, one of the most important gains is that each generation accomplishes more work per watt. The most recent offerings from Intel and AMD deliver up to three times more work per watt consumed. This has enormous benefits for data centres that wish to offer more services with constant or reduced power requirements.
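
A gain in work per watt can be spent in two ways: the same work for less power, or more work for the same power. The sketch below illustrates both using the "up to three times" figure as a best case. The 300 kW load and the function names are hypothetical, and real gains depend on the workload.

```python
def power_for_same_work(current_power_kw: float, work_per_watt_gain: float) -> float:
    """Power needed to deliver today's work after a work-per-watt improvement."""
    if work_per_watt_gain <= 0:
        raise ValueError("work_per_watt_gain must be positive")
    return current_power_kw / work_per_watt_gain


def work_for_same_power(current_work_units: float, work_per_watt_gain: float) -> float:
    """Work delivered within today's power budget after the same improvement."""
    return current_work_units * work_per_watt_gain


# Hypothetical IT load of 300 kW and a best-case 3x gain in work per watt.
print(power_for_same_work(300.0, 3.0), "kW for the same work")
print(work_for_same_power(1.0, 3.0), "x the work at the same power")
```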

CPU differences

CPUs for servers and workstations are available in many different configurations. They are generally categorised by the number of cores per CPU, the processor clock rate, the boost clock speed, the power draw, and the amount of cache. The number of cores and the clock rate are generally related to the amount of electricity used. Higher core counts and clock rates usually require more electricity and run hotter. Conversely, lower core counts and clock rates use less power and run cooler.

For example, suppose a workload does not need to be completed within a defined amount of time. A server with lower-powered (and generally lower-performing) CPUs can then be used, whereas a higher-powered system is appropriate when the SLA is more stringent. An email server is one such instance. Viewing or downloading emails on a client device needs to feel interactive, but a slower and less power-demanding CPU can be used since the bottlenecks are storage and networking.

On the other hand, a higher-performing, higher-power CPU would be appropriate for a database environment where data may need to be analysed quickly. While putting an email server on a high-performing system would not cause harm, the system would be underused and would draw more power than the workload requires.
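
The selection rule described here, choosing the least power-hungry CPU that still meets the workload's performance requirement, can be sketched as follows. The CPU names, power figures, and relative performance scores are invented for illustration and do not describe real products.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuOption:
    name: str
    power_watts: int             # hypothetical rated power draw
    relative_performance: float  # hypothetical score; higher is faster


def pick_cpu(options: list[CpuOption], required_performance: float) -> CpuOption:
    """Return the lowest-power option that still meets the performance need."""
    candidates = [o for o in options if o.relative_performance >= required_performance]
    if not candidates:
        raise ValueError("no CPU option meets the required performance")
    return min(candidates, key=lambda o: o.power_watts)


# Hypothetical catalogue, for illustration only.
CATALOGUE = [
    CpuOption("low-power", power_watts=120, relative_performance=1.0),
    CpuOption("mid-range", power_watts=200, relative_performance=1.8),
    CpuOption("high-performance", power_watts=350, relative_performance=3.0),
]

print(pick_cpu(CATALOGUE, required_performance=1.0).name)  # e.g. an email server
print(pick_cpu(CATALOGUE, required_performance=2.5).name)  # e.g. a database
```

Under these assumed figures, the email workload lands on the low-power part and the database workload on the high-performance part.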

Accelerators

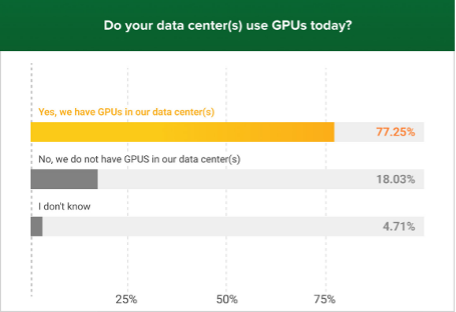

Today’s computing environments are becoming more heterogeneous. Accelerators are available to increase the performance of specific tasks, even as CPU performance has continued to increase over the past few years. A recent Supermicro survey of over 400 IT professionals and data centre managers found that many operators are using GPUs today. When used for highly parallel algorithms, GPUs can reduce run time significantly, lowering overall power costs.

SSD vs. HDD

Hard disk drives (HDDs) have been the primary storage method for over 50 years. While HDD capacity has increased dramatically in recent years and throughput has also improved, access time has remained relatively constant. Solid-state drives (SSDs), however, are faster for data retrieval and use less power than HDDs, although HDDs remain suitable for longer-term storage within an enterprise. M.2 NVMe SSDs currently transfer about 3 GB/s, which can significantly reduce the time required to complete an I/O-heavy application. Overall, this performance results in lower energy consumption for the time taken to complete a task compared with other I/O technologies.
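
The link between throughput and energy follows from energy being power multiplied by time. The sketch below assumes a task that is purely I/O-bound and a system that draws the same power with either drive type. The 3 GB/s figure comes from the text, while the HDD throughput, the system power, and the data size are hypothetical values chosen for illustration.

```python
def task_energy_wh(data_gb: float, throughput_gb_per_s: float,
                   system_power_w: float) -> float:
    """Energy in watt-hours to move data_gb at a given sustained throughput."""
    if throughput_gb_per_s <= 0:
        raise ValueError("throughput must be positive")
    seconds = data_gb / throughput_gb_per_s
    return system_power_w * seconds / 3600


DATA_GB = 1200.0         # hypothetical amount of data read by the task
SYSTEM_POWER_W = 400.0   # hypothetical system power, assumed equal for both cases
NVME_GB_PER_S = 3.0      # figure quoted in the text
HDD_GB_PER_S = 0.2       # hypothetical HDD sustained throughput

nvme_wh = task_energy_wh(DATA_GB, NVME_GB_PER_S, SYSTEM_POWER_W)
hdd_wh = task_energy_wh(DATA_GB, HDD_GB_PER_S, SYSTEM_POWER_W)
print(f"NVMe: {nvme_wh:.1f} Wh, HDD: {hdd_wh:.1f} Wh")
```

In practice the drives themselves also differ in power draw, and few tasks are entirely I/O-bound, so this only shows the direction of the effect.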

Boosting refresh cycles of server system components

The major components of servers are continually improving in terms of price and performance. As applications continue to use more data, whether for AI training, increased resolution, or more I/O (as with content delivery networks), the latest servers that contain the most advanced CPUs, memory, accelerators, and networking may be required.

However, each of the sub-systems evolves at a different rate. Refresh cycles are decreasing from five years to three, according to some estimates, but entire servers do not need to be discarded at each refresh, which would add to electronic waste. With a disaggregated approach, the components or sub-systems of a server can be replaced as newer technology becomes available. A well-designed chassis can accommodate several generations of electronic components, which allows for component replacement. If the chassis is designed for future increases in the power required by CPUs or GPUs, it will not have to be discarded as new CPUs become available.

Servers can be designed to accept a range of CPUs and GPUs. With certain server designs, individual components can be replaced with newer ones, cutting down the e-waste associated with sending an entire server to a landfill or even to a certified recycling specialist.

Ultimately, there are several ways to reduce power consumption at the server level. Energy consumption can be reduced by looking for the most application-optimised servers with the technology needed to perform specific workloads. Using multi-node or blade servers that share some components can also reduce power usage. It’s important to understand system technology and learn about the right system for the right job.