For many years the industry has been in a deep discussion about the concept of edge computing. Yet the definition varies from vendor to vendor, creating confusion in the market, especially where end-users are concerned.

In fact, within more traditional or conservative sectors, some customers are yet to truly understand how the edge relates to them, meaning the discussion needs to change, and fast.

According to Gartner, “the edge is the physical location where things and people connect with the networked, digital world, and by 2022, more than 50% of enterprise-generated data will be created and processed outside the data centre or cloud.” All of this data invariably needs a home, and depending on the type of data that is secured, whether it’s business or mission-critical, the design and location of its home will vary.

Autonomous vehicles are but one example of an automated, low-latency and data-dependent application. The real-time control data required to operate the vehicle is created, processed and stored via two-way communications at a number of local and roadside levels. On a city-wide basis, the data produced by each autonomous vehicle will be processed, analysed, stored and transmitted in real time, in order to safely direct the vehicle and manage the traffic. Yet on a national level, the data produced by millions of AVs could be used to shape transport infrastructure policy and redefine the automotive landscape globally.

Each of these processing, analysis, and storage locations requires a different type of facility to support its demand. Right now, data centres designed to meet the needs of standard or enterprise business applications are plentiful. However, data centres designed for dynamic, real-time data delivery, provisioning, processing, and storage are in short supply.

That’s partly because of the uncertainty over which applications will demand such infrastructure and, importantly, over what sort of timeframe. However, there’s also the question of flexibility. Many of the existing micro data centre solutions are unable to meet the demands of edge or, more accurately, localised, low-latency applications, which also require high levels of agility and scalability. This is due to their pre-determined or specified approach to design and infrastructure components.

Traditionally, the market has been served with small-scale edge applications deployed in pre-populated, containerised solutions. A customer is often required to conform to a standard shape or size, and there’s no flexibility in terms of modularity, components, or make-up. So how do we change the thinking?

A flexible edge

Standardisation has, in many respects, been crucial to our industry. It offers a number of key benefits, including the ability to replicate systems predictably across multiple locations. But when it comes to the edge, some standardised systems aren’t built for the customer – they’re a product of vendor collaboration, one that’s also accompanied by high costs and long lead times.

Having a box with everything in it can undoubtedly solve some pain points, especially where integration is concerned. But what happens if the customer has its own alliances, or does not need all of the components? What happens if they run out of capacity at one site? Those original promises of scalability or flexibility disappear, leaving the customer with just one option – to buy another container. Rigidity in the name of ‘standardisation’ can often be detrimental to the customer.

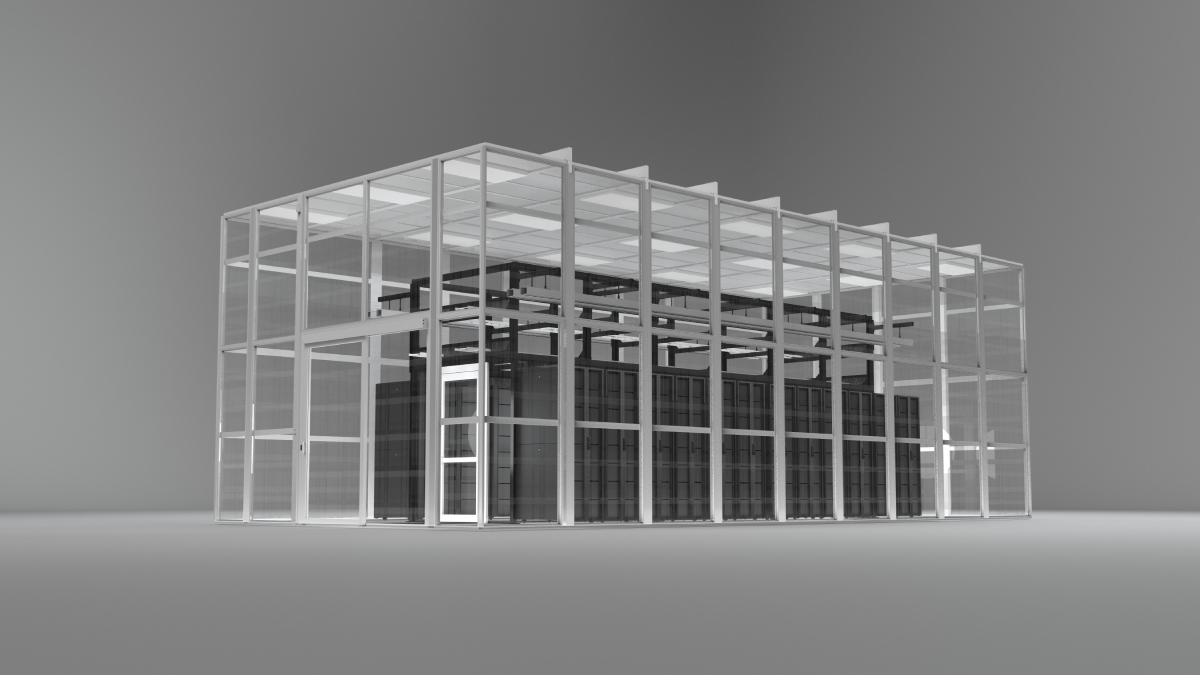

There is, however, the possibility that such modular, customisable, and scalable micro data centre architectures can meet the end-user’s requirements perfectly, allowing end-users to truly define and embrace their edge.

Is there a simpler way?

Today, forecasting growth is a key challenge for customers. With demands increasing to support a rapidly developing digital landscape, many will have a reasonable idea of what capacity is required today. But predicting how it will grow over time is far more difficult, and this is where modularity is key.

For example, a content delivery network that, pre-pandemic, had capacity located near large user groups may have found itself swamped with demand in the days of lockdown. Today, it may be considering how to scale up local data centre capacity quickly and incrementally to meet customer expectations, without deploying additional infrastructure across more sites.
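To put rough numbers on that trade-off, the short Python sketch below compares how much capacity sits idle when it can only be added in large fixed blocks versus small modules. It is purely illustrative: the demand forecast, the 100 kW "container" size, and the 10 kW "module" size are hypothetical figures chosen for the example, not data from any real deployment or product.

```python
def capacity_plan(demand_kw, unit_kw):
    """For each period, return (demand, installed, unused) in kW.

    Assumes capacity can only be added in whole units of `unit_kw`
    and is never removed once installed (an illustrative simplification).
    """
    rows = []
    installed = 0
    for demand in demand_kw:
        # Ceiling division: smallest whole number of units covering demand.
        units_needed = -(-demand // unit_kw)
        installed = max(installed, units_needed * unit_kw)
        rows.append((demand, installed, installed - demand))
    return rows


# Hypothetical demand forecast in kW over five planning periods.
forecast = [40, 55, 90, 130, 210]

container_plan = capacity_plan(forecast, unit_kw=100)  # large fixed blocks
modular_plan = capacity_plan(forecast, unit_kw=10)     # small increments

container_unused = sum(unused for _, _, unused in container_plan)
modular_unused = sum(unused for _, _, unused in modular_plan)

print("Unused kW-periods, container-style:", container_unused)
print("Unused kW-periods, modular:", modular_unused)
```

Under these assumed figures, the coarse-grained approach carries far more idle capacity across the forecast than the fine-grained one. A real comparison would also need to account for unit pricing, lead times, and site constraints, none of which are modelled here.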

There is also the potential of 5G-enabled applications. How does one define what’s truly needed to optimise and protect the infrastructure in a manufacturing environment? Should an end-user purchase a containerised micro data centre because that’s what’s positioned as the ideal solution? Or should they customise and engineer a solution that can grow incrementally with demands? Or would it be more beneficial to deploy a single room that offers a secure, high-strength, walkable roof that can host production equipment?

The point here is that when it comes to micro data centres, a one-size-fits-all approach does not work. End-users need the ability to choose their infrastructure based on their business demands – whether they be in industrial manufacturing, automotive, telco, or colocation environments. But how can users achieve this?

Infrastructure agnostic architectures

At Subzero Engineering, we believe that vendor-agnostic, flexible micro data centres are the future for the industry. For years we’ve been adding value to customers, and building containment systems around their needs, without forcing their infrastructure to fit into boxes.

We believe users should have the flexibility to utilise their choice of best-in-class data centre components, including the IT stack, the uninterruptible power supply (UPS), cooling architecture, racks, cabling, or fire suppression system. So by taking an infrastructure-agnostic approach, we give customers the ability to define their edge, and use resilient, standardised, and scalable infrastructure in a way that’s truly beneficial to their business.

This approach alone offers significant benefits, including a 20-30% cost saving compared with conventional ‘pre-integrated’ micro data centre designs.

For too long now, our industry has been shaped by vendors that have forced customers to base decisions on systems constrained by the solutions those vendors offer. We believe now is the time to disrupt the market, eliminate this misalignment, and enable customers to define their edge as they go.

By providing customers with the physical data centre infrastructure they need, no matter their requirements, we can help them plan for tomorrow. As I said, standardisation can offer many benefits, but not when it’s detrimental to the customer.